Training

Fox World Travel Concur Support Desk

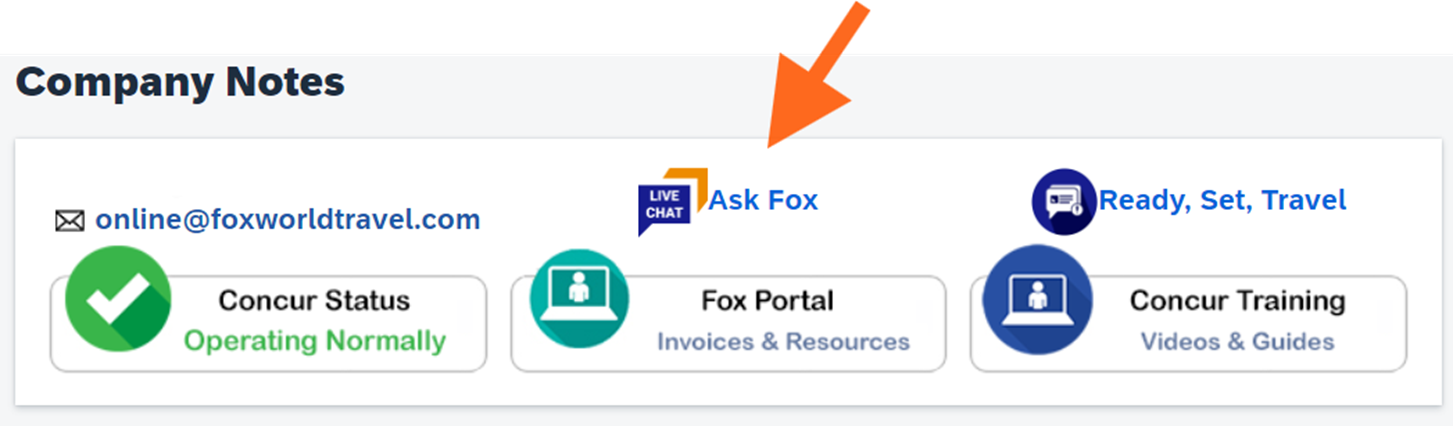

Fox World Travel is available to support your Concur questions. Contact support by phone at 608-710-4172, by email at online@foxworldtravel.com, or by chat in the Concur application.

Additional Support

Contact your institution’s travel manager for additional support questions.

Personalized SAP Concur Open Updates

Personalized up-to-the-minute service availability and performance information

-

OPI-6188291 : US2 : Travel : Issue Identified and Resolved

21 January 2026 | 3:37 pm

A system outage delayed the posting of incident communications. The issue has since been resolved, and communications for incidents that occurred during the outage are now being published. Most New Concur Travel customers were unable to perform successful travel searches for 50 minutes. Our internal monitoring tools, which measure successful travel submissions, detected a 95% drop in travel submissions during the incident. Further investigation confirmed that travel submissions were affected by the error "Sorry something went wrong," which appeared after users entered their search parameters and clicked the Search button for air, car, and hotel searches. If you were affected, you may have been able to complete a search successfully by trying again. The Incident Response Team (IRT) quickly reverted a recent change to restore service. We have verified that service performance is stable and will now resolve this incident. An investigation into the root cause of this incident will now be conducted, and a root cause analysis report will be provided when that investigation is complete.

-

OPI-6182038 : US2 : Travel : Issue Identified and Resolved

9 January 2026 | 4:45 pm

New Concur Travel users experienced an issue completing travel bookings. Our internal observability tools detected a 100% drop in travel bookings for approximately 17 minutes. When attempting to complete a travel booking from the Review and Book page, affected users may have encountered the error "Sorry, something went wrong." No workaround was identified during the service disruption. Services recovered on their own while the Incident Response Team (IRT) was assembling. We have verified that service performance is stable and will now resolve the incident. An investigation into the root cause of this incident will now be conducted, and a root cause analysis report will be provided when that investigation is complete.